A Tutorial@IEEE CoG 2024

Prompt Engineering for Science Birds Level Generation and Beyond

Slide

The slide deck for the tutorial can be found here

Latest update: August 5, 2024 9:45AM (GMT+2)

Prerequisite

If you want to follow along with the tutorial, you will need a reasonably capable computer. LLMs are downloaded as files, stored on your computer, and later loaded into RAM, so you will need enough storage and memory for the model you choose. The program also works on CPU-only computers, but processing will be slow. We therefore recommend a computer with a sufficiently powerful discrete GPU, NPU, or Apple M-series chip.

Please download and install the following software before the tutorial if you want to follow along:

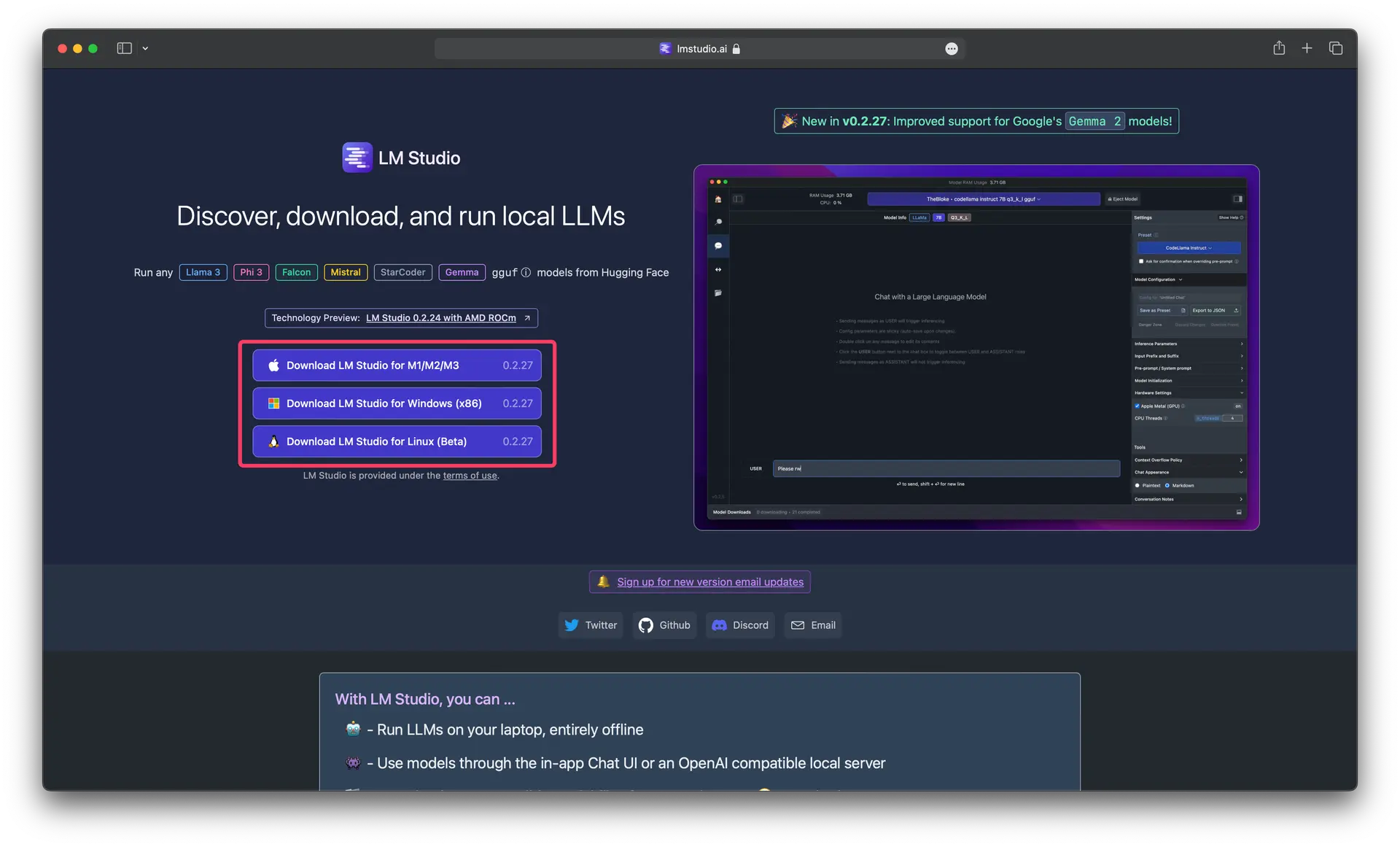

- Download LM Studio from the LM Studio website.

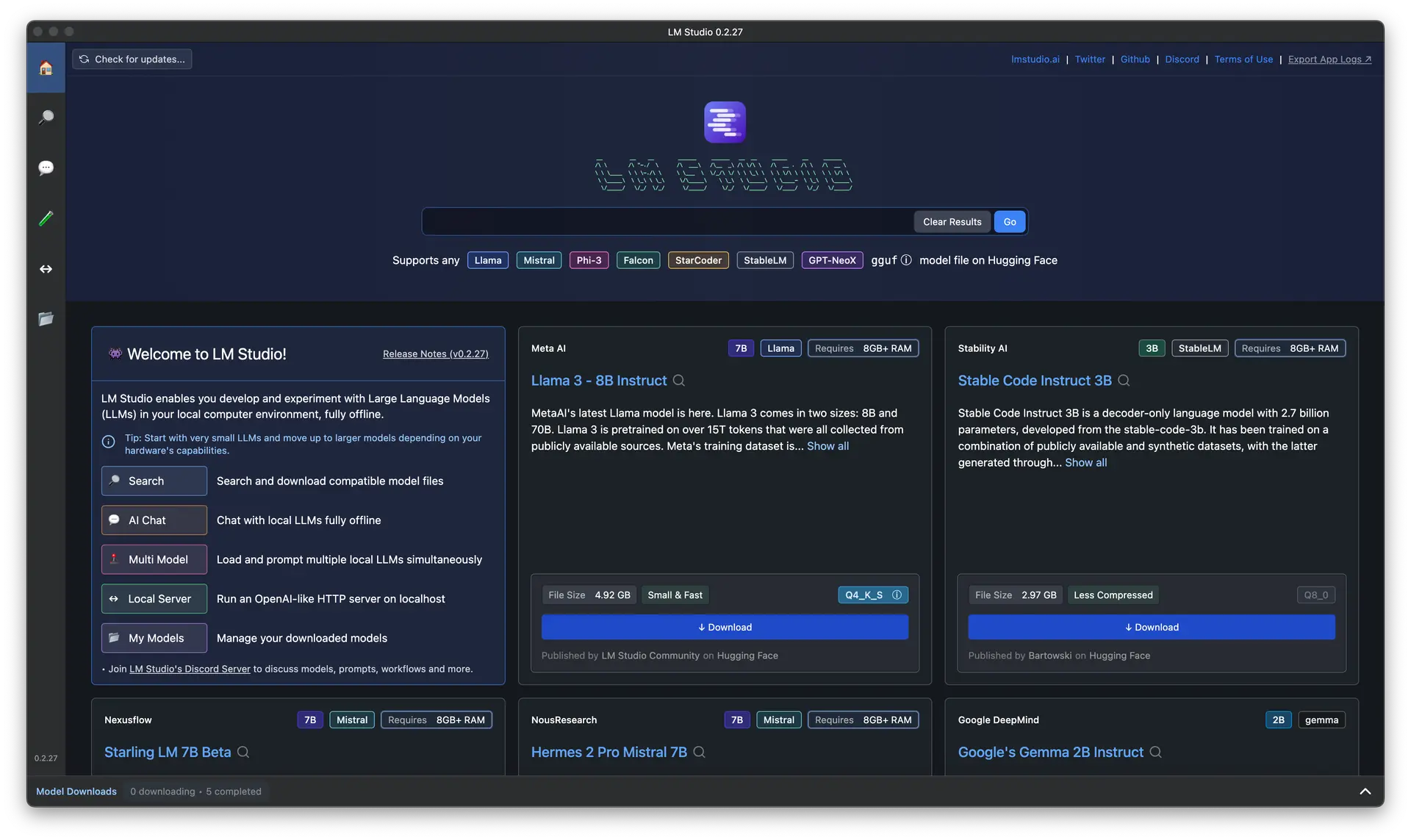

- Open the downloaded file and follow the installation instructions. Once installed, open LM Studio.

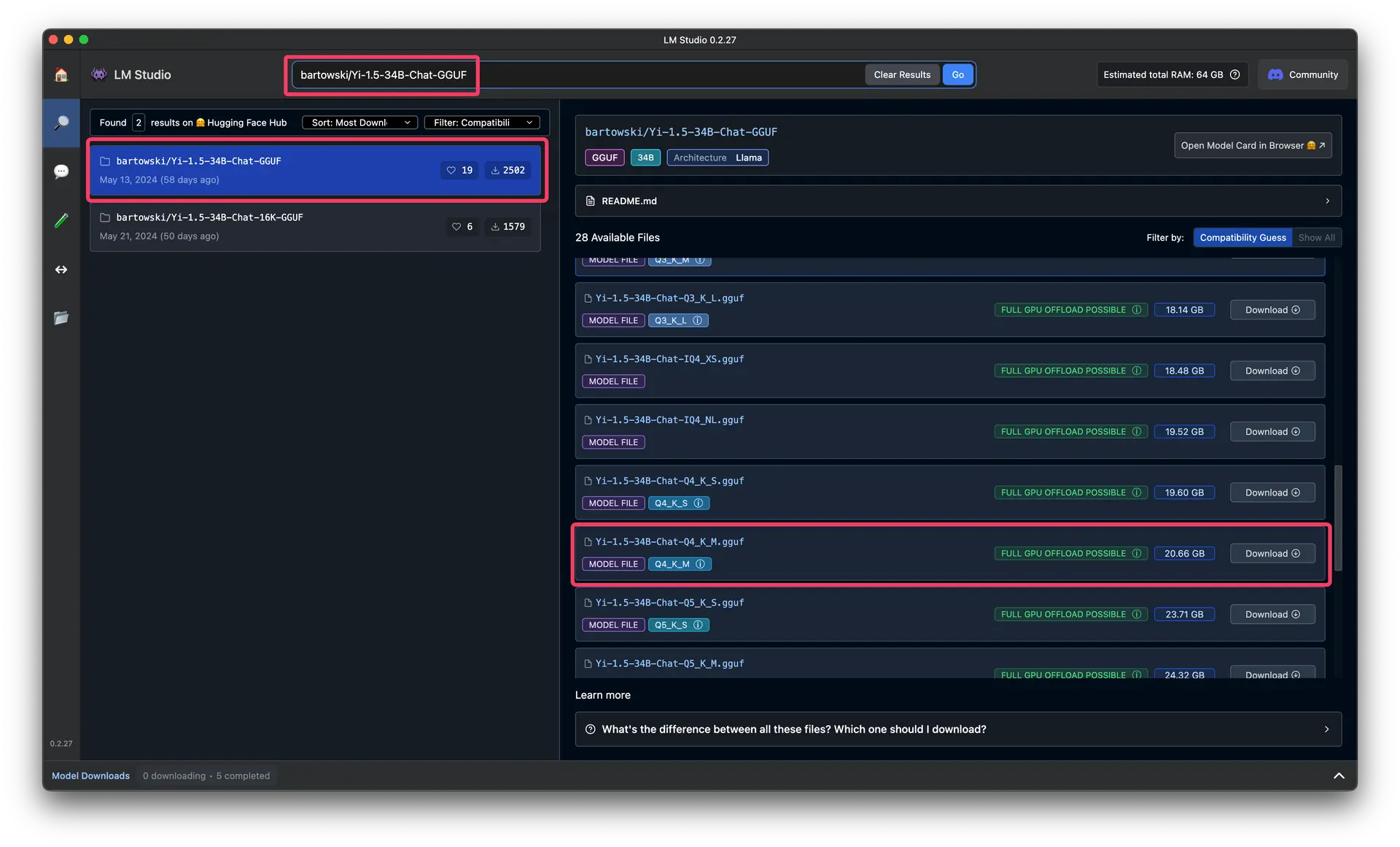

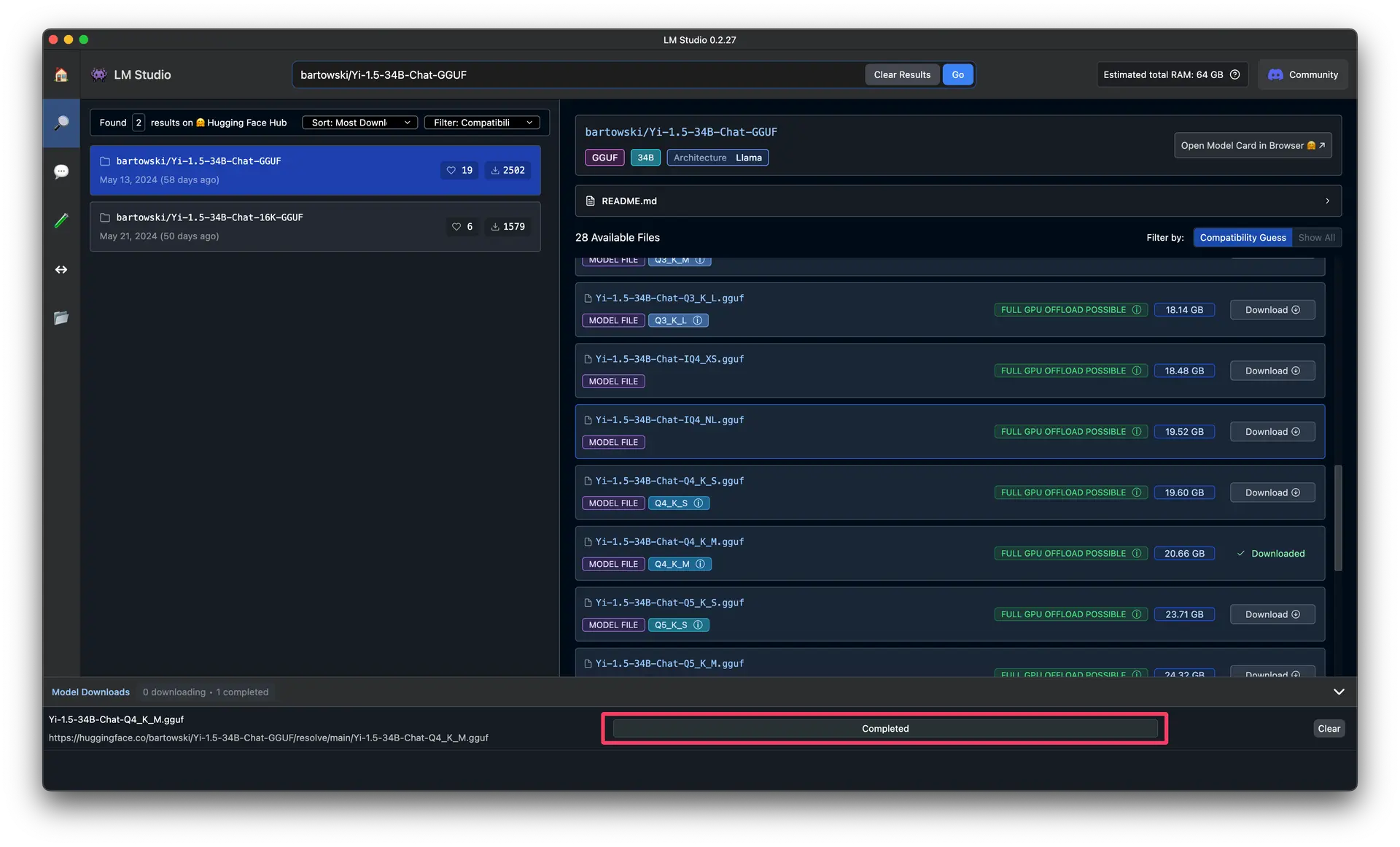

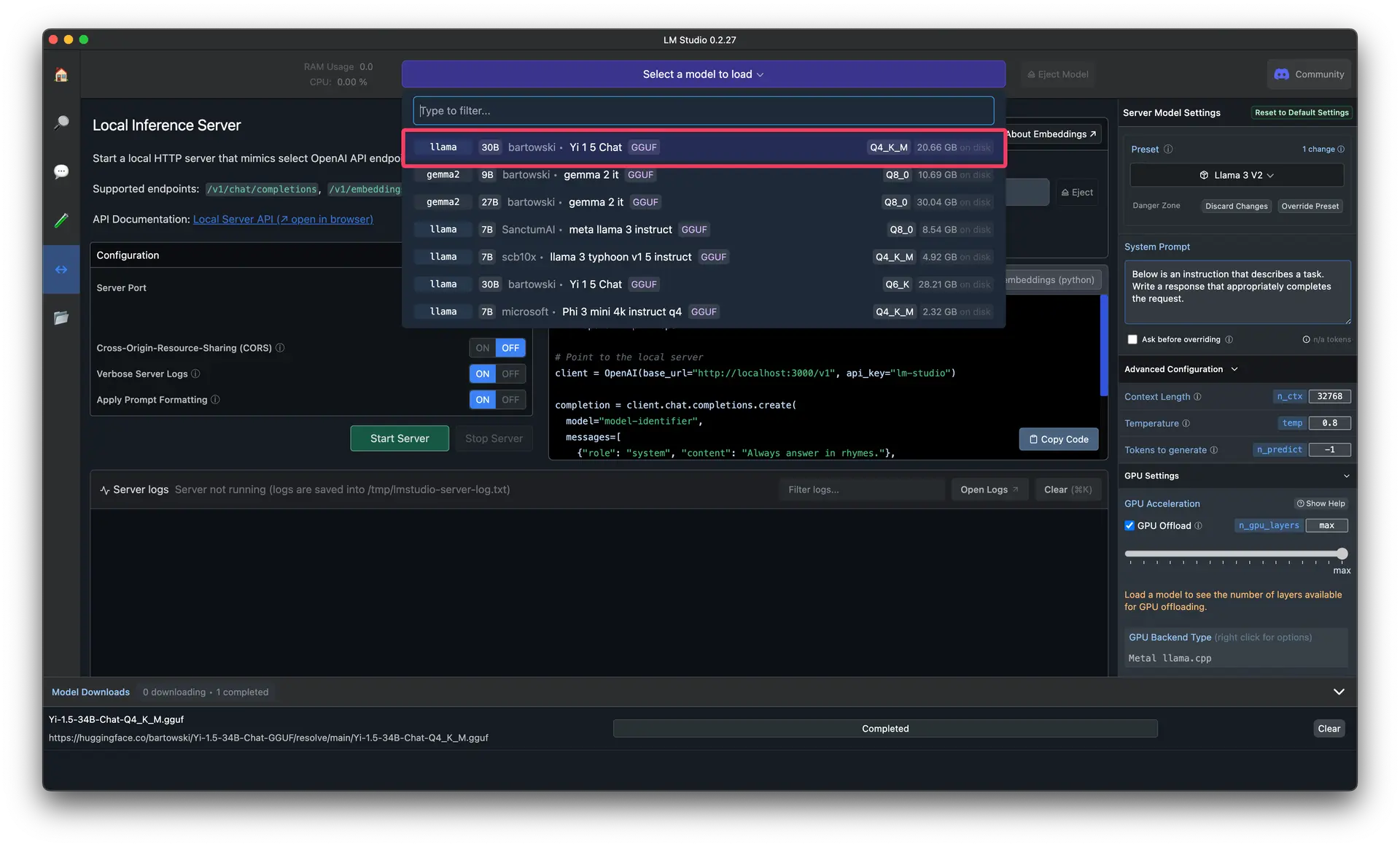

- Download one of the following large language models from the "Search" page:

  - If you have VRAM > 32GB: bartowski/Yi-1.5-34B-Chat-GGUF (Q4_K_M)

  - If you have VRAM >= 8GB: lmstudio-community/Meta-Llama-3.1-8B-Instruct-GGUF (Q8_0)

  - The rest: bartowski/Phi-3.1-mini-4k-instruct-GGUF (Q8_0_L)

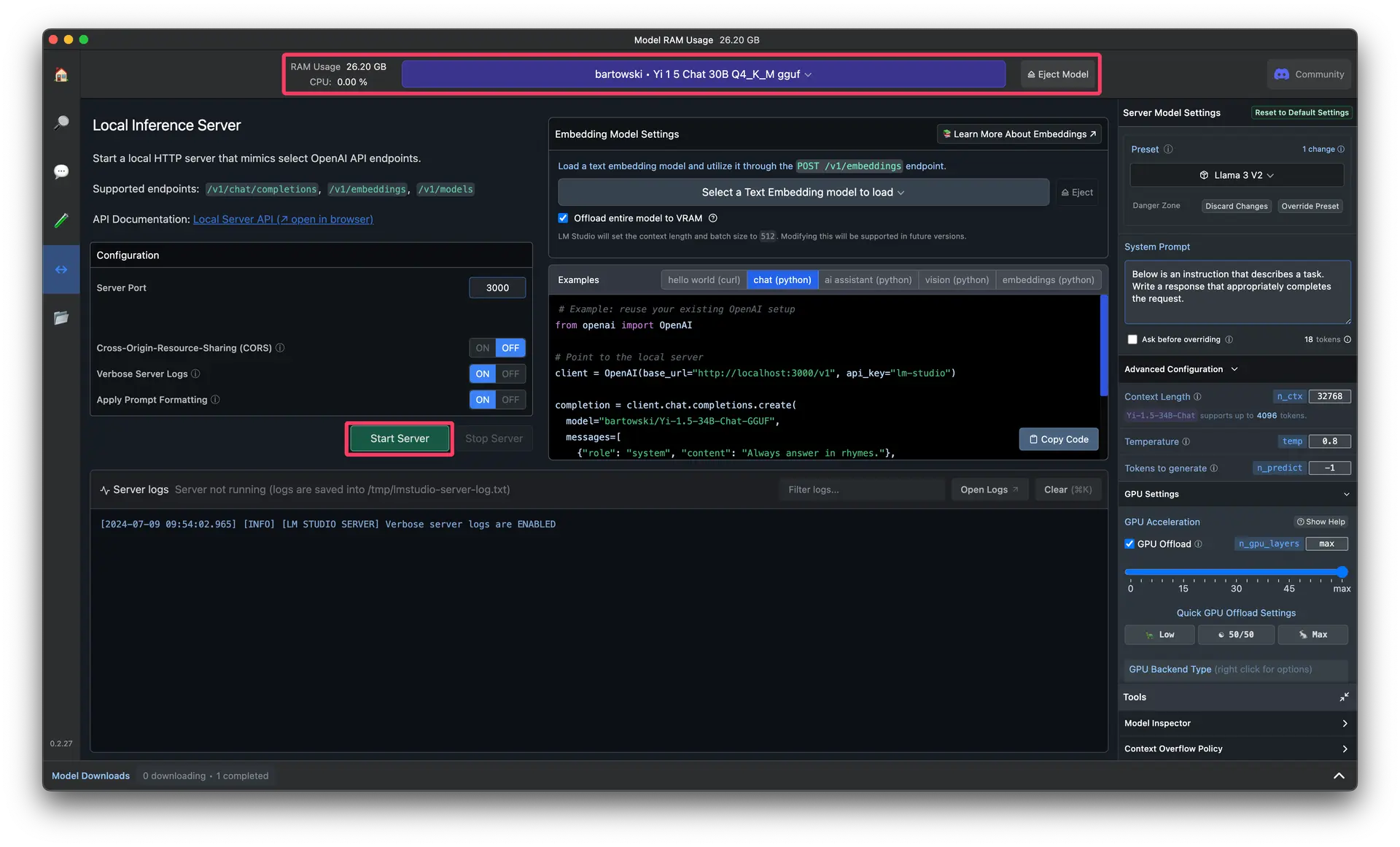

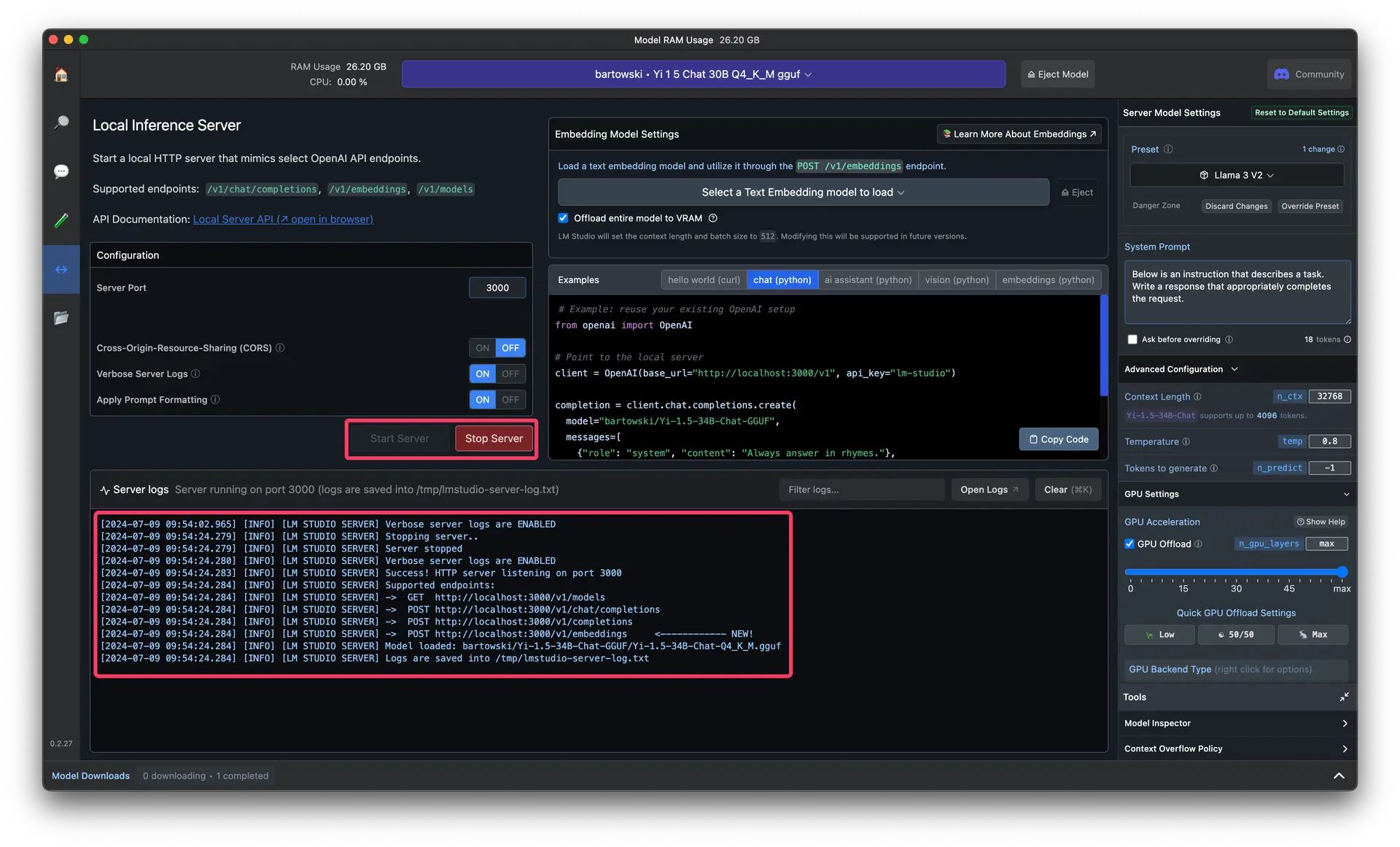
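The VRAM thresholds above can be written as a small lookup, which may be handy if you set up several machines. This is only an illustrative sketch: the function name `recommend_model` is hypothetical and not part of the tutorial's starting code, and you need to supply the VRAM figure yourself.

```python
def recommend_model(vram_gb: float) -> str:
    """Return the model suggested in this tutorial for a given amount of VRAM.

    `vram_gb` is the VRAM in gigabytes; the thresholds mirror the list above.
    """
    if vram_gb > 32:
        return "bartowski/Yi-1.5-34B-Chat-GGUF (Q4_K_M)"
    if vram_gb >= 8:
        return "lmstudio-community/Meta-Llama-3.1-8B-Instruct-GGUF (Q8_0)"
    # Everything else, including machines without a discrete GPU.
    return "bartowski/Phi-3.1-mini-4k-instruct-GGUF (Q8_0_L)"
```

For example, `recommend_model(12)` returns the Llama 3.1 8B entry, which you can then paste into the "Search" page.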

- Once the model is downloaded, navigate to the "Local Server" tab, select the model, and click "Start Server".

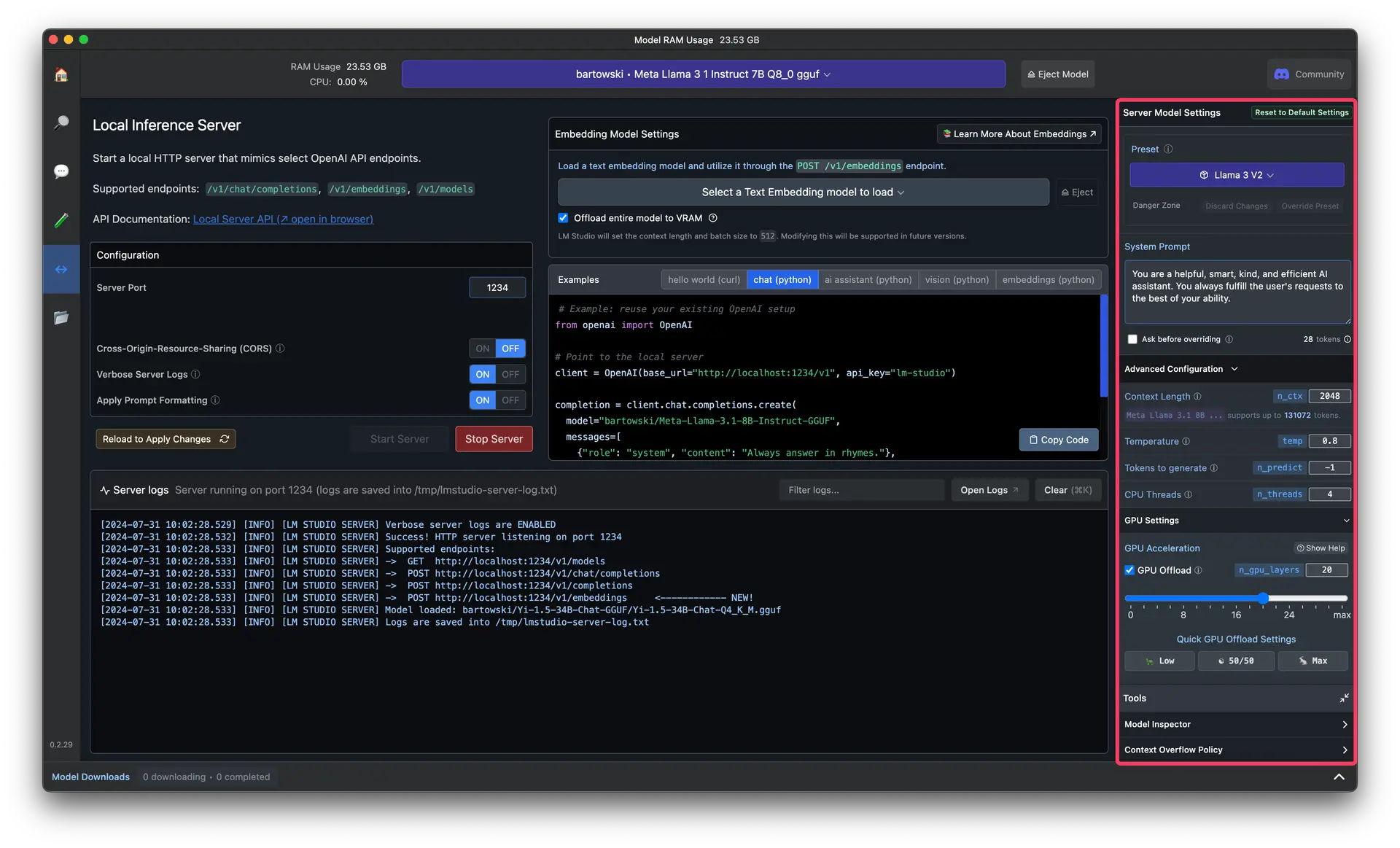

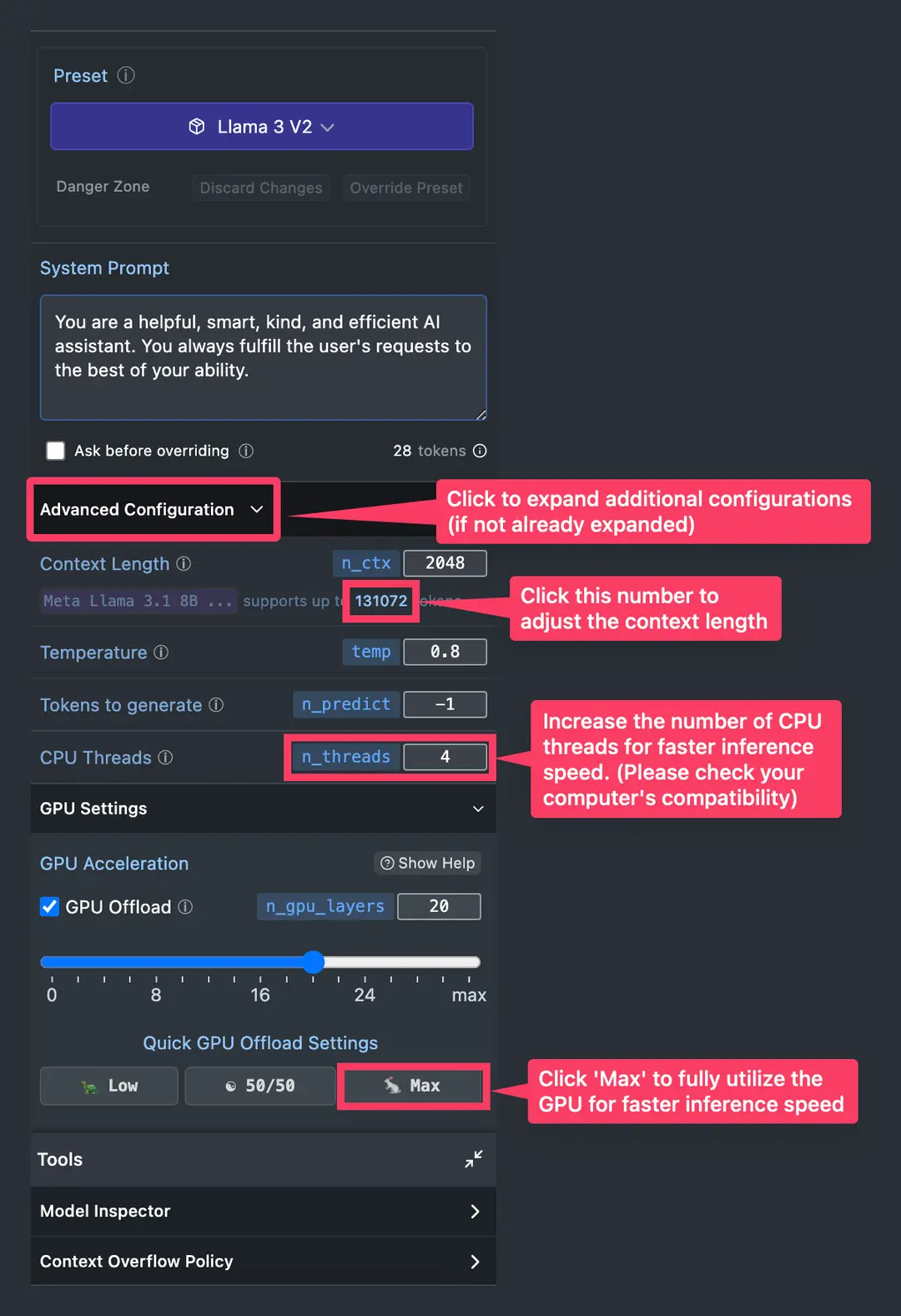
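Once the server is running, you can check it from Python before the tutorial. The sketch below assumes LM Studio's OpenAI-compatible endpoint at its default address `http://localhost:1234/v1`; if you changed the port, adjust `BASE_URL`. The helper names `build_chat_request` and `ask`, and the `"local-model"` placeholder, are illustrative and are not taken from the starting code.

```python
import json
import urllib.request

# Assumption: the local server listens on LM Studio's default address.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model", temperature=0.7):
    """Build an OpenAI-style chat-completions request for the local server."""
    payload = {
        # Placeholder name; use the model identifier shown in LM Studio.
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send the prompt to the running server and return the reply text."""
    request = build_chat_request(prompt, **kwargs)
    # Local inference can be slow, so allow a generous timeout.
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server started, calling `print(ask("Say hello in one sentence."))` should print a short reply. A connection error usually means the server is not running or is listening on a different port.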

(Optional) Additional Configurations

- You may want to configure the following settings for faster inference speed. However, please note that these settings are subject to compatibility with the computer's hardware.

Starting Code

Starting code can be found at this GitHub repository.

Additional instructions on how to run the code can be found in the README.md file of the repository.